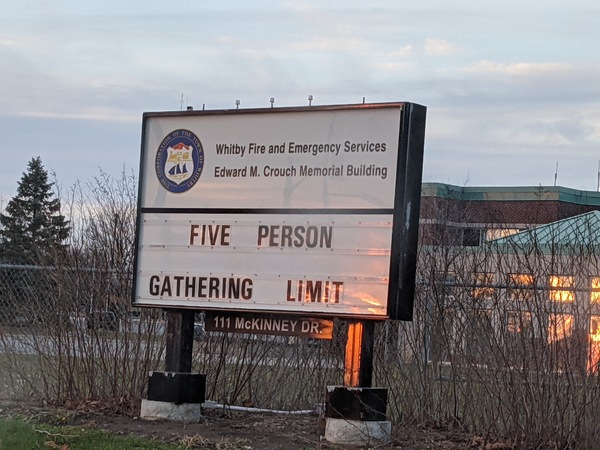

Dear reader, I am writing this from my bunker in lockdown. Here we are on the final day of 2020, and all I can say is, whew, we made it.

The front page of this blog goes back a year and a half, which doesn’t say a lot about my level of commitment to this here writing thing of late. I shall try to make up for that by summarizing some of the projects I actually completed in 2020, but which I was too lazy to document contemporaneously, so they might as well have not happened at all. Well, here’s your bunch of write-ups all squashed into one blog entry, happy now?

I celebrated my first anniversary at Amazon in October, a year which saw us ship a huge cross-team project and deliver an outage-free Christmas. If you are one of the millions who interacted with Alexa this year, my many colleagues and I helped make that happen. If Alexa responded with something completely nonsensical or useless, well, then, that was probably some other team’s fault.

I’m happy to still be working in software, 22 years since I took my first full-time job. Back then, I took one of my first paychecks to the local hi-fi stereo vendor and purchased a Paradigm home theater setup. My roommate and I didn’t have any furniture to speak of, but who needs that when you can watch VHS tapes in 5.1 surround on a giant tube TV! These days, the surrounds and center channel speakers are gathering dust, but I still use the bookshelf speakers and sub. Recently, I noticed these poor old Atoms were rattling whenever the bass kicked in. YouTubers said that this is common and you need to replace your foam surrounds and you can buy a kit and do it yourself and did you know that you could just buy a new pair of monitors for as little as $5000 and also get some gold-plated optical cables while you are at it for the warmest possible digital sound. So yes, I did buy such a kit and I did do it myself.

Well, this was an epically bad glue job, but there are no longer any clicks while listening to Technotronic’s _Pump Up The Jam_, so we are good for another 20 years or so.

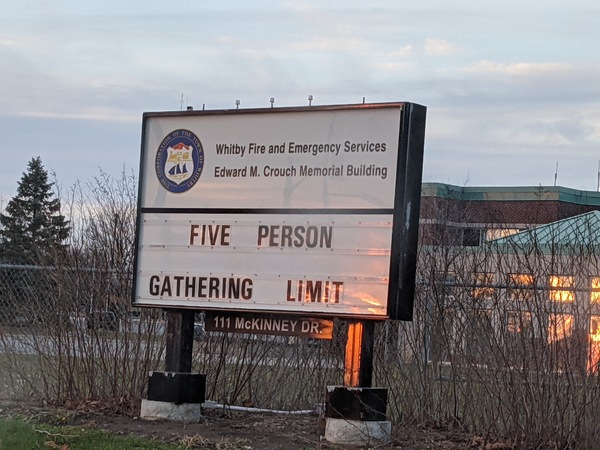

Dining: for those who don’t know, in 2020, we were hit with a global SARS-CoV-2 pandemic. Never a family to eat out much anyway, we cut out the few remaining visits to eateries in the interest of not dying. The odd craving did strike though, so I fashioned a cheesecake, bagels, fried chicken, lattes, and doughnuts _with my bare hands_!

As in previous years, I grew a garden over the summer. The new-to-me crops: cucumbers and potatoes. Of the former, I had a lot: I ended up with something like ten pints of pickles even after having cukes in salads every day. I had only a few potatoes, but was surprised to find that the homegrown varieties had a much different, nuttier taste than supermarket spuds. Both will probably make an appearance next year, but I’ll need to balance out the yields. Also ended up with quite a few jalapeños, which turned into a dozen jars of pepper jelly, and the usual amount of tomatoes (sauces, paste, pizza toppers, and so on). All this despite a family of rabbits literally living in my raised bed.

Anyway, this is all I can remember doing in 2020, or at least those things I have pictures of. Here’s hoping we get some vaccines in 2021 and we can go outside again. Wake me when that happens.