The entire sum of my machine learning experience thus far is a couple of courses in grad school, in which I wrote a terrible handwriting recognizer and various half-baked natural language parsers. As luck would have it, I was just a couple of years too early for the Deep Learning revolution (at the time, support vector machines were all the rage), so I’ve been watching the advancements of the last few years with equal measures of idle interest and bewilderment. Thus, when I recently stumbled across the fast.ai MOOC, I couldn’t resist following along.

I have to say I really enjoy the approach of “use the tools first, then learn the theory.” In the two days since I started the course, I have already built a couple of classifiers and gotten very good results, much more easily than with my handwriting recognizer of yore.

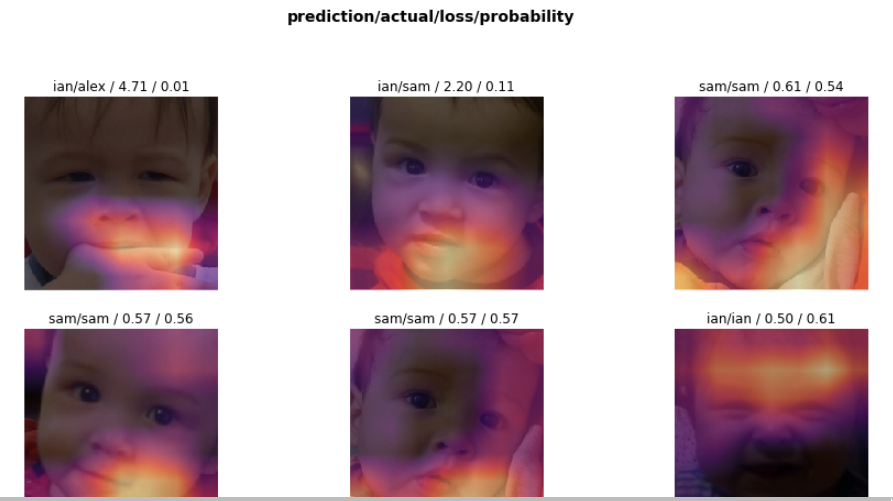

My first model was trained on 450 baby pictures of my children and achieved 98% accuracy. Surprisingly, the mistakes did not confirm our prior that Alex and Sam looked most alike as babies; instead, the model tended to confuse each of them with Ian. The CNN predicts that I am 80% Alex. Can’t argue with that math.
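For the curious, checking which kids get mixed up comes down to tallying (actual, predicted) pairs over the misclassified pictures. Here is a minimal sketch in plain Python, not the course's own tooling: the `confusion_counts` helper is a name I made up, and the labels are invented purely for illustration rather than taken from my real validation set.

```python
from collections import Counter

def confusion_counts(actual, predicted):
    """Count (actual, predicted) pairs for the misclassified examples only."""
    return Counter((a, p) for a, p in zip(actual, predicted) if a != p)

# Made-up labels for illustration only; these are NOT my real results.
actual    = ["Alex", "Alex", "Sam", "Sam", "Ian", "Ian"]
predicted = ["Alex", "Ian",  "Sam", "Ian", "Ian", "Ian"]

mistakes = confusion_counts(actual, predicted)

# List the confused pairs, most frequent first.
for (a, p), n in mistakes.most_common():
    print(f"{a} mistaken for {p}: {n}")
```

With real predictions, the pairs at the top of that list are the ones the model finds hardest to tell apart.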

The second classifier was trained on Pokemon, Transformers, and Jaegers (giant robots from Pacific Rim). This one gets about 90% accuracy; not surprisingly, it has a hard time telling the two robot classes apart, but has no trouble picking out the Pokemon.

I’m still looking for a practical application, but all in all, it’s a fun use for a GPU.