As I wrote somewhere in the Metaverse, I’m running the wireless-testing tree now, which is about as minimal a contribution as one could make. It essentially means running a script once daily; cron could do it all, if not for the part where I enter my SSH passphrase to actually push out the updates. For the curious, here’s the script.

One of the killer features of git used here is git rerere, which remembers conflict resolutions and reapplies them when the same conflict comes up again. A human (me) still needs to resolve each conflict once, but after that it’s pretty much on autopilot for the rest of that kernel series. Normally one should reinspect the automatic merge made by git rerere, but at least for this testing tree, throwing caution to the wind is warranted, and thus the script has some kludges around that.
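To make the rerere behavior concrete, here is a minimal, self-contained sketch. It is not the script from this post: the throwaway repository, the branch name `topic`, and the file contents are all made up for illustration. It also assumes that "throwing caution to the wind" can be approximated by enabling `rerere.autoupdate` and committing as soon as no unmerged paths remain, which is one plausible kludge rather than necessarily the one used here.

```shell
#!/bin/sh
# Sketch: record a conflict resolution once, then let git rerere replay it.
# Everything happens in a throwaway repository; all names are hypothetical.
set -eu

repo=$(mktemp -d)
cd "$repo"
git init -q .
git config user.name "Example"
git config user.email "example@example.invalid"
git config rerere.enabled true      # record and replay resolutions
git config rerere.autoupdate true   # also stage replayed resolutions

echo base > file.txt
git add file.txt
git commit -q -m "base"
main=$(git symbolic-ref --short HEAD)

# Two branches change the same line, so merging them conflicts.
git checkout -q -b topic
echo topic > file.txt
git commit -q -am "topic change"
git checkout -q "$main"
echo main > file.txt
git commit -q -am "main change"

# First merge: a human resolves the conflict; rerere records the resolution
# when the merge is committed.
git merge topic >/dev/null 2>&1 || true
echo resolved > file.txt
git add file.txt
git commit -q -m "merge topic (resolved by hand)"

# Throw the merge away and redo it, as a daily rebuild of the tree might.
git reset -q --hard HEAD~1
git merge topic >/dev/null 2>&1 || true

# git merge still stops, even though rerere has already fixed up the file.
# The "kludge": if nothing is left unmerged, commit without reinspecting.
if [ -z "$(git diff --name-only --diff-filter=U)" ]; then
    git commit -q --no-edit
fi

cat file.txt
```

The second merge still exits with an error even though rerere has already replayed the resolution, which is why some extra step is needed before an unattended script can carry on.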

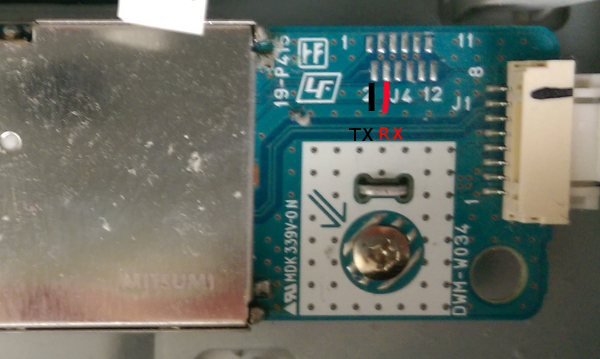

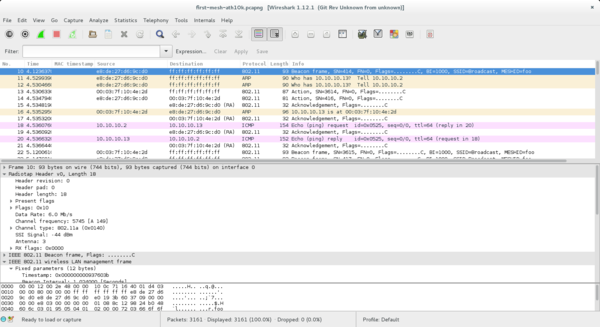

Every pushed tag has passed a build test with whatever kernel config I am using locally (not claimed to be exhaustive). I’ve considered some more extensive tests here, such as running the hostapd test suite (takes an hour with 8 VMs on my hardware) or boot testing on my mesh APs (requires a giant stack of out-of-tree patches), but, given the parentheticals, I’m keeping it simple for now.

Observant persons may have noted some missing dates in the tags. Generally, this means either nothing actually changed in the trees I’m pulling, or it is during the merge window when things are too in flux to be useful. Or maybe I forgot.
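The tagging policy described above (tag only after a successful build, and skip days when nothing changed) could be sketched roughly as follows. This is an illustration, not the real script: the function name `tag_if_ok`, the dated tag format, and the idea of passing the build command as arguments are all assumptions.

```shell
#!/bin/sh
# Sketch: create a dated tag only if HEAD moved since the last tag with the
# same prefix AND the given build command succeeds. Names are hypothetical.
set -eu

# Usage: tag_if_ok PREFIX BUILD_CMD [ARGS...]
tag_if_ok() {
    prefix=$1
    shift

    # Most recent tag with this prefix reachable from HEAD (empty if none).
    last=$(git describe --tags --abbrev=0 --match "${prefix}-*" 2>/dev/null || true)

    if [ -n "$last" ] &&
       [ "$(git rev-parse "${last}^{commit}")" = "$(git rev-parse HEAD)" ]; then
        echo "no changes since $last; skipping"
        return 0
    fi

    # Run the build test; refuse to tag if it fails.
    if ! "$@"; then
        echo "build failed; not tagging" >&2
        return 1
    fi

    tag="${prefix}-$(date +%Y-%m-%d)"
    git tag "$tag"
    echo "tagged $tag"
}
```

It might be invoked as `tag_if_ok testing make -j"$(nproc)"` from the top of the kernel tree, where `testing` is a placeholder prefix. Pushing is deliberately left out, since that is the step that needs the passphrase.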