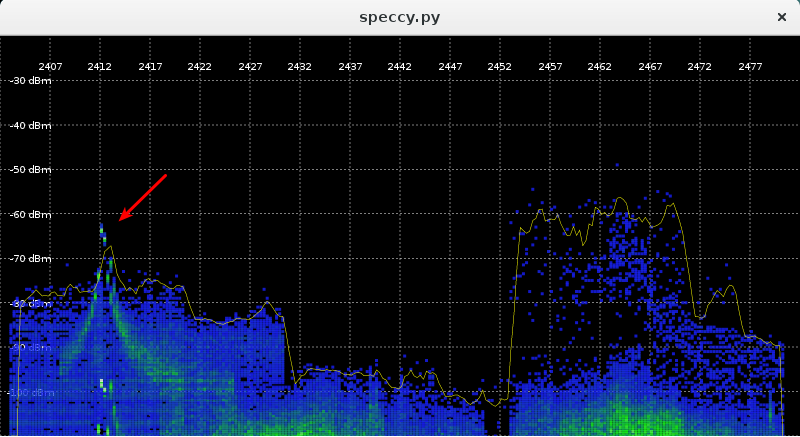

I will admit to being an insufferable word nerd: the kind of person who will intentionally make a pun and then ruin it by acknowledgment. My wife is the sufferable sort who, presented with the same joke, groans outwardly and inwardly at the same time. Together, we have a shared appreciation for the crossword puzzle, and can be found on the porch filling out a grid on the rare occasion that a lazy summer afternoon presents itself. We can usually get through a Thursday or Sunday NYT puzzle, but we aren’t going to be entering any speed solving contests.

A few months ago, I heard about Qxw, a crossword construction program for Linux. As a puzzle solver, I had always found the art of creating a puzzle mysterious and best left to those far smarter than myself, but any old dumb person can run some software. I finally tried it out yesterday, and here’s the result.

This is an 11×11 puzzle; newspaper puzzles are usually 15×15, but this was my first try, so I wanted to keep it easy-ish and constructable in a few hours. For the uninitiated, there are a few rules that help guide one into picking a layout: generally there are a few (~3) longish answers that form a theme; the layout is rotationally symmetric (the pattern of black squares looks the same when the grid is turned upside down); the smallest answers are 3 characters. Taken together, this means two of the theme answers are usually 3-4 rows from the top and bottom, of the same length and in the same relative location, while the third theme answer is in the middle. Additional black boxes are sprinkled about to break up extra-long words.
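For the programmers in the audience, the layout rules can be sketched in a few lines of Python. This is only an illustration, not how Qxw does it: the function names are made up, black squares are stored as (row, column) pairs counted from zero, and I'm assuming the usual convention that the grid looks the same after a 180-degree turn. The check also treats any run of fewer than three white squares as a problem, lone squares included, which is my reading of the newspaper-style rules.

```python
def rotate(cell, size):
    """Return the cell's partner under a 180-degree rotation of the grid."""
    row, col = cell
    return (size - 1 - row, size - 1 - col)


def add_mirrored_blocks(blocks, size):
    """Return the black squares plus each one's rotated partner."""
    return set(blocks) | {rotate(cell, size) for cell in blocks}


def short_slots(blocks, size, min_len=3):
    """List runs of white squares shorter than min_len, across and down.

    Each problem is reported as (direction, line index, start, length).
    """
    problems = []
    for direction in ("across", "down"):
        for i in range(size):
            run_start = None
            # Go one step past the edge so a run ending at the border is closed.
            for j in range(size + 1):
                cell = (i, j) if direction == "across" else (j, i)
                is_white = j < size and cell not in blocks
                if is_white and run_start is None:
                    run_start = j
                elif not is_white and run_start is not None:
                    if j - run_start < min_len:
                        problems.append((direction, i, run_start, j - run_start))
                    run_start = None
    return problems
```

Blacking out the square at (0, 4) on an 11×11 grid, for example, also blacks out (10, 6), and `short_slots` returns an empty list as long as no answer has been squeezed below three letters.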

It turns out that Qxw didn’t actually help much besides automatically placing the mirrored black squares: once I filled in my theme answers and blacked off part of the grid, Qxw couldn’t fill any other spaces. I suspect expanding the dictionary beyond /usr/share/dict/words would help a lot in this respect. Instead, I occasionally used egrep on the dictionary file with suitable regexes to find candidate words, and resorted to some abbreviations and prefixes. I did cheat a few times by googling some combination of letters that I hoped would be a thing (1-down, 6-down, 35-down). I need to improve my 3-letter word vocab.
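The dictionary search is easy to reproduce. Here is a rough Python equivalent of the kind of lookup I was doing, where a dot stands for an unfilled square. The function names are invented for this sketch, and skipping entries with apostrophes or other punctuation is my own choice, since possessives are no use in a grid.

```python
import re


def load_words(path="/usr/share/dict/words"):
    """Read one word per line from a dictionary file."""
    with open(path, encoding="utf-8") as handle:
        return [line.strip() for line in handle]


def candidates(pattern, words):
    """Return lowercase words that fit a slot pattern such as 'c.t'.

    The pattern is an ordinary regular expression that must match the
    whole word; entries containing non-letters are skipped.
    """
    regex = re.compile(pattern, re.IGNORECASE)
    return sorted({w.lower() for w in words if w.isalpha() and regex.fullmatch(w)})
```

Calling `candidates("q..z", load_words())` would list every four-letter entry that starts with Q and ends with Z, assuming the dictionary file exists at that path on your system.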

Things I did do that annoy me as a solver:

- Fairly obscure names or characters (35-down, at least to me, a non-Trekkie)

- Use of old stand-bys (10- and 14-across; I burned through these quickly!)

- Weak multi-word answers (9-down)

- Foreign words (1-down)

Despite the flaws, Angeline solved it in about 10 minutes while watching TV; she also supplied a much better clue for 25-across, which I have appropriated.

I’d say this effort gets a B-, but it was an interesting exercise to do the puzzle from the other side. 13×13 next.