Having successfully shipped a project at $dayjob after some extended crunch time, I took this week off to recharge. This naturally gave me the opportunity to, um, write more code. In particular, I worked a bit on my crossword constructor while also constructing a crossword. I’m a bit rusty in this area, so while I was able to fill a puzzle with a reasonable theme, I’m probably going to end up redoing the fill before trying to publish that one because some areas are pretty yuck.

Which brings me to computing project number two: a neural net that tries to grade crosswords. Now, I can do and have done this using composite scores of the entries, based on word list rankings, but for this go-round I thought it would be fun to emulate the semi-cantankerous NYT crossword critic, Rex Parker. Parker (his nom de plume) is infamous for picking apart the puzzle every day and identifying its weak spots. Some time ago, Dave Murchie set up a website, Did Rex Parker Like The Puzzle, which, as the URL suggests, gives the short-on-time enthusiast the Reader’s Digest version. What if we had, say, wouldrexparkerlikethepuzzle.com: would this level of precognition inevitably lead us into an apocalyptic nightmare, even worse than the one we currently inhabit? Let us throw caution to the wind like so many Jurassic Park scientists and see what happens.

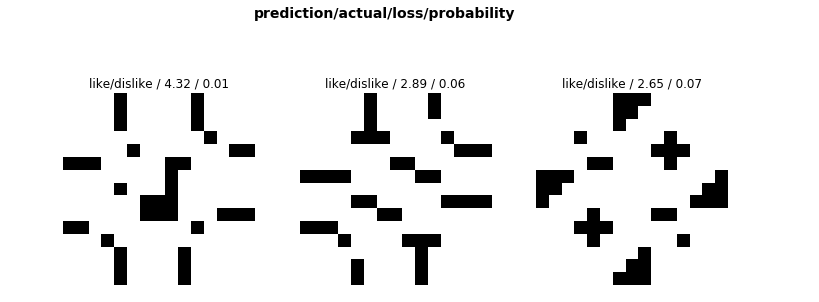

I didn’t do anything so fancy as to generate prose with GPT-3; instead I just trained a classifier using images of the puzzles themselves. Maybe, thought I, a person (and therefore a NN) can tell whether the puzzle is good or bad just by looking at the grid. Let’s assume Rex is consistent in what he likes. If so, we could use simple image recognition to tell whether something is Rex-worthy or not. Thanks to Murchie’s work, I already had labels for four years of puzzles, so I downloaded all of those puzzles and trained an NN on them, as one does.

I tried a couple of options for the grid images. In one experiment, I used images derived from the filled grids, letters and all; in another, I considered only the empty grid shape itself. It didn’t make much difference either way, which suggests the language aspect of the puzzle is either not very useful for this task or not adequately captured by the model.
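To make the two input variants concrete, here is a minimal sketch of how a grid could be turned into a shape-only input versus a letters-included input. This is not the pipeline I actually used (that worked from images); it assumes a hypothetical text encoding where each row is a string and `#` marks a black square, and the function names are made up for illustration.

```python
# Assumption: grids are lists of equal-length strings, '#' = black square,
# any other character = a filled letter cell. Names here are hypothetical.

def grid_to_shape_mask(rows):
    """Shape-only input: 1 for a black square, 0 for an open square."""
    width = len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same length")
    return [[1 if ch == "#" else 0 for ch in row] for row in rows]


def grid_to_letter_codes(rows):
    """Filled-grid input: 0 for a black square, 1-26 for the letters A-Z."""
    return [
        [0 if ch == "#" else ord(ch.upper()) - ord("A") + 1 for ch in row]
        for row in rows
    ]


# A toy 3x4 grid, not a real puzzle.
toy_grid = ["CAT#", "AREA", "#EAR"]
shape = grid_to_shape_mask(toy_grid)
letters = grid_to_letter_codes(toy_grid)
```

The shape mask throws away everything about the fill, so if a model does about as well on it as on the letter codes, the letters are not contributing much.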

How well did it work? Better than a coin flip, but not by a lot.

When trained with filled grids, it achieved an accuracy of 58.7%. When trained with just the grid shape, it achieved an accuracy of 61.4%.

Both models said he would like today’s (10-31-2020) puzzle, about which he was actually fairly ambivalent. My guess is that the model is really keying in on the number of black squares as a proxy for the puzzle being a Friday or Saturday puzzle, which he tends to like better than those from any other day of the week, so this one was ranked highly. Predicting from the black-square count alone would probably have performed similarly.
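That last guess is easy to check with a one-feature baseline. The sketch below is something I have not run against the real data: it counts black squares and picks the cutoff that best separates liked from disliked puzzles. It assumes the same hypothetical `#`-for-black text encoding, assumes that fewer black squares means "more likely liked", and uses made-up counts and labels purely to show the mechanics.

```python
# Assumptions: '#' marks a black square; the rule is "liked if the count is
# at or below a threshold". The data below is invented for illustration.

def count_black_squares(rows):
    """Count '#' cells in a grid given as a list of strings."""
    return sum(row.count("#") for row in rows)


def fit_threshold(counts, labels):
    """Return (threshold, accuracy) for the best rule 'liked if count <= threshold'."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(counts)):
        preds = [c <= t for c in counts]
        acc = sum(p == y for p, y in zip(preds, labels)) / len(labels)
        if acc > best_acc:  # strict '>' keeps the lowest threshold on ties
            best_t, best_acc = t, acc
    return best_t, best_acc


# Toy data, not real puzzles: black-square counts and whether Rex liked each one.
toy_counts = [28, 30, 32, 36, 38, 40]
toy_labels = [True, True, False, True, False, False]
threshold, accuracy = fit_threshold(toy_counts, toy_labels)
```

If a rule this simple lands near the accuracy of the neural net on held-out puzzles, the net is probably not seeing much beyond the day of the week.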